In the realm of machine learning, clustering stands as a powerful unsupervised learning technique that unveils hidden patterns within datasets. From customer segmentation to image analysis, clustering algorithms group data points together based on similarities, offering insights into complex datasets without the need for labeled examples.

What is Clustering?

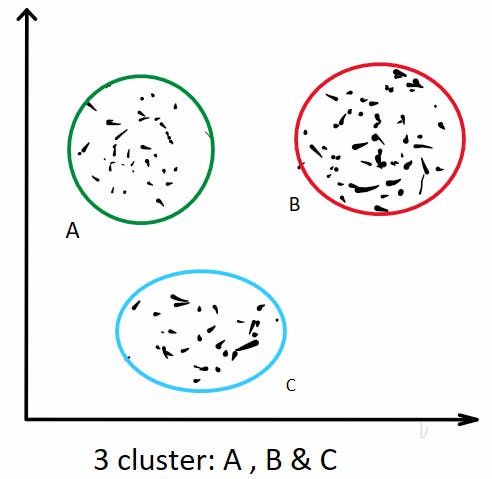

Clustering is a form of unsupervised learning where algorithms automatically group data points into distinct clusters based on their inherent similarities. Unlike supervised learning, which relies on labeled data, clustering algorithms explore the underlying structure of data without predefined categories.

How Does Clustering Work?

Let's delve into the mechanics of clustering using a simple example:

Example: Customer Segmentation in E-commerce

Consider an e-commerce platform collecting data on customer purchasing behavior. Each customer's data includes attributes such as age, location, purchase history, and preferences.

1. Data Representation: The dataset contains rows representing individual customers and columns representing attributes like age, location, and purchase history.

2. Similarity Measure: Clustering algorithms employ similarity measures to quantify how alike or different data points are. For instance, the Euclidean distance metric can measure the distance between two customers based on their attributes.

3. Cluster Assignment: Initially, the algorithm may assign data points randomly or based on certain criteria. As it iterates, it reassigns data points to clusters based on their similarity to cluster centroids.

4. Cluster Representation: Each cluster is represented by a centroid, computed as the mean or median of the data points within the cluster. The centroid serves as the cluster's prototype.

5. Evaluation: Clustering algorithms are evaluated based on criteria such as intra-cluster cohesion and inter-cluster separation. Cohesion measures how closely data points within a cluster resemble each other, while separation assesses the dissimilarity between clusters.

Types of Clustering

- Hierarchical Clustering: Builds a tree of clusters by recursively merging or splitting based on similarity.

- Non-Hierarchical Clustering: K-Means is a common type of non-hierarchical clustering. In K-Means, data points are split into 'k' groups by reducing the total distance within each group

- DBSCAN (Density-Based Spatial Clustering of Applications with Noise): Groups densely packed data points together.

Real-World Applications

Clustering finds applications across diverse domains:

- Marketing: Segmenting customers based on purchasing behavior for targeted marketing campaigns.

- Image Processing: Grouping pixels with similar characteristics for image segmentation tasks.

- Anomaly Detection: Identifying unusual patterns in data, such as fraudulent transactions in finance.

- Recommendation Systems: Grouping users with similar preferences to make personalized recommendations.

Conclusion

Clustering is a versatile tool that uncovers hidden structures within data, enabling deeper insights and informed decision-making across various industries. By understanding the mechanics and applications of clustering algorithms, businesses can extract valuable insights and drive innovation in a data-driven world.

Happy clustering!